Introduction

This document is a guide to my content generation and publication stack as of February 2020.

The goal of my particular technology stack is to make sure that my content persists as long as it possibly can. While it is much easier to create and publish information than ever before, it is also much easier for that information to degrade. At the extreme ends of archival, it is probably just as easy for documents to survive in the digital era as it is in the paper and ink era--flash drives or hard drives, lost to the ages in dusty back rooms, can have their contents retrieved for the world to access via archival software.

However, in the less extreme case, it is much easier for content to be lost. Old blogs that disappear when we no longer pay for them, or that export in a format that takes some expertise to extract (for example, a MySQL or PostgreSQL database). Formats that are deprecated and hard to find a reader for. Links that change because someone left an institution, or a company switched its blogging platform--all of these are problems that are more severe in an era where information doesn't merely sit and get musty on an old shelf, but rots with ever-changing infrastructure.

As such, my goal is to make sure that the content and thoughts that I generate are as durable as any book, if not more so. This means that I must put forth some additional effort in setting up a foundation, but hopefully that effort will pay off. At the same time, due to the constraints of resiliency, there is a limit on how complicated a technical stack I can build for myself, because I need to be able to replicate it, offline, in the worst circumstances.

There are different problems in resiliency to consider.

- Are you able to back up and restore your files, distribute them against a single point of failure, and generally make sure you won't lose them?

- Do you have a system easily capable of reading all of your files?

- Do you have an editing environment for your files?

- If you can read them, can you execute scripts, compile code, edit diagrams, etc. in your files?

- For publication, do you have a clean workflow that makes sure that what is published is a subset of what you have available to you, and that minimal changes are needed once published? Can you view it in the published form locally without any sort of internet connection?

Towards this, I've tried to pick a set of standards and tools that I can maintain copies of and that, despite being different platforms for authoring and publication, will output very similar things.

My choices aren't perfect, but they do meet my needs. There is no 'perfect' pipeline--even completely standard choices are constantly evolving for more standardization for interoperability while adding new features that break interoperability.

We can only try to make sure that at each moment in time, our work of the past is viewable in its entirety.

To summarize the tools I've chosen at this time (January 2020):

- Git

- VS Code (with extensions)

- Obsidian

- Grav (with extensions)

- Python (Anaconda)

In general, there should be very few decisions made that violate the basic principles of Markdown and file linking. Mermaid has been added to make creating charts easier, but may be removed in favor of generating and saving them via Python.

Authoring Philosophy

While one set of constraints that I've chosen has to do with making sure I can essentially CRUD the content I create for as long as I want to, another major constraint is making sure that my actual workflow works for me. It can't be stilted: it can't be difficult to make files go where I want them to, and it can't impose long hours of maintenance, redirects, editing, etc.

Regardless of where I generate content, it should have a 'final home' in a centralized location. This ensures that I never lose the content. Centralized here is meant in the sense that there is a single conceptual location, not a single infrastructural location (described above). This means I only really have to track one final project that serves as a master list of what I've written and created. If there are other tools I output to, I should keep a skeleton of how the content lives in them in my master list.

A minimum ontology should be used for anything that is complete enough to link to. Anything more than having separate folders to represent ingestion and output (such as for Grav) is too much. It is possible that there should be a secondary ontology for notes ingested by other tools as opposed to long form input, as the most likely 'secondary form'.

Generally, I should have to do as little work as possible to move content from my central repository to the output location. As I said above, I'll shadow in my central repository the format it needs to be in the output (again, for resilience). It's possible I might do some kind of thing with a git submodule that makes it easy to pull down into my published location.

While I won't start with it, ideally I should be able to either use some kind of internal tag to choose what gets moved into a specific output folder, or to move it into the folder manually and generate the right format for publication. This would require me to do some extra scripting to look at tags that I put into the headers of my markdown files, though, so it's not on the list currently.
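The tag-based selection described above could be sketched roughly as follows. This is only an illustration, not a script that exists in my repository: the function names, the `publish` tag, and the assumption that tags live in a flat `tags:` entry inside a `---` delimited header are all hypothetical.

```python
from pathlib import Path


def read_front_matter_tags(text):
    """Return the tags listed in a simple YAML-style front matter block.

    Only two flat forms are handled (an assumption of this sketch):
        tags: [publish, essay]
        tags:
          - publish
          - essay
    Anything more elaborate would need a real YAML parser.
    """
    lines = text.splitlines()
    if not lines or lines[0].strip() != "---":
        return []  # no front matter at all
    tags = []
    in_tag_list = False
    for line in lines[1:]:
        stripped = line.strip()
        if stripped == "---":  # end of the front matter block
            break
        if stripped.startswith("tags:"):
            inline = stripped[len("tags:"):].strip()
            if inline:
                # Inline form: tags: [a, b]
                for item in inline.strip("[]").split(","):
                    if item.strip():
                        tags.append(item.strip().strip("'\""))
                in_tag_list = False
            else:
                # Block form: the tags follow as "- item" lines
                in_tag_list = True
        elif in_tag_list and stripped.startswith("- "):
            tags.append(stripped[2:].strip().strip("'\""))
        elif stripped:
            in_tag_list = False
    return tags


def files_tagged(root, tag):
    """Yield Markdown files under `root` whose front matter contains `tag`."""
    for path in sorted(Path(root).rglob("*.md")):
        if tag in read_front_matter_tags(path.read_text(encoding="utf-8")):
            yield path
```

Calling `files_tagged("writing", "publish")` would then list the candidates to copy into an output folder; the folder name and tag are placeholders.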

- To the best of my ability, I should make sure that links don't change, both internally and externally. This follows the philosophy of Gwern's "Long Site"

- As I said above, though, aiming for 10-20 years of external links working is reasonable--we'll see if aiming for 100 years of internal links is reasonable in 100 years.

- Ontologies should at least be conceptually similar.

- Taxonomies should also be at least conceptually similar.

- The Grav theme I've selected (and a number of others) defaults to 'category' and 'tag'. 'Category' would correspond best to the writing folder (e.g. Samples, Writing, Archive) and 'tag' would correspond to my nested tags.

It is very possible, and indeed likely, that I will want to or need to change my entire architecture eventually. In that case I will want a good way to refactor all of my links. One possibility is using regex via VSCode Search. TODO: Python3 Markdown Link Checker.
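As a starting point for the link checker mentioned in the TODO, here is a minimal sketch using only the standard library. It is an assumption about what such a checker might look like, not an existing tool: the names `find_links` and `broken_local_links` are made up, only inline `[text](target)` links are recognized, and site-absolute links (starting with `/`) are skipped rather than resolved.

```python
import re
from pathlib import Path
from urllib.parse import unquote

# Inline Markdown links and images: [text](target) or ![alt](target "title")
LINK_PATTERN = re.compile(r"!?\[[^\]]*\]\(([^)\s]+)(?:\s+\"[^\"]*\")?\)")

# Anything that starts with a URL scheme (https:, mailto:, ...) is external
EXTERNAL = re.compile(r"[a-zA-Z][a-zA-Z0-9+.-]*:")


def find_links(markdown_text):
    """Return every inline link target found in the given Markdown text."""
    return LINK_PATTERN.findall(markdown_text)


def broken_local_links(root):
    """Return (file, target) pairs for relative links whose target is missing."""
    broken = []
    for md_file in sorted(Path(root).rglob("*.md")):
        for target in find_links(md_file.read_text(encoding="utf-8")):
            # Skip external URLs, in-page anchors, and site-absolute links.
            if EXTERNAL.match(target) or target.startswith(("#", "/")):
                continue
            # Drop any #fragment and decode %20-style escapes before checking.
            local_path = unquote(target.split("#", 1)[0])
            if not (md_file.parent / local_path).exists():
                broken.append((md_file, target))
    return broken
```

Running `broken_local_links(".")` from the repository root after a reorganization would give a list of links to fix; reference-style links and targets containing parentheses are not covered by this pattern.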

Resilience

Backup and Restoring Files

The first problem in resiliency I discuss above is the ability to back up and restore files. I am primarily concerned here with the actual content files, as I will discuss backing up the files used to read the content below.

For this I've selected Git. I could really pick any type of version control system, including a 'dumb' one like saving zip files with version numbers on them, but I picked Git because it's standard, because there's good editing tooling for markdown on it, because it handles all sorts of other needs I have (large file management perhaps in the future, for example), and generally because it makes my life simple (unless I get into Git hell, of course).

My content is backed up:

- On at least one remote Git repository

- On at least one computer

- Dropbox

- OneDrive

- TODO: Thumbdrives stored in separate locations

While it's likely that over the course of my life I'll still lose something--due to Git/human interface errors, if for no other reason--this type of resiliency will make it much harder to do so.
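One way to gain some confidence that the copies listed above really match is to compare file hashes between two of them. The sketch below is an illustration under assumptions of my own choosing: the function names are hypothetical, both copies are assumed to be reachable as local folders (for example a working copy and a synced Dropbox folder), and the `.git` directory is skipped because its internal files can differ between otherwise identical copies.

```python
import hashlib
from pathlib import Path


def manifest(root, skip_dirs=(".git",)):
    """Map each file's path (relative to `root`) to the SHA-256 of its contents."""
    root = Path(root)
    result = {}
    for path in sorted(root.rglob("*")):
        relative = path.relative_to(root)
        # Ignore directories themselves and anything inside a skipped directory.
        if not path.is_file() or any(part in skip_dirs for part in relative.parts):
            continue
        result[relative.as_posix()] = hashlib.sha256(path.read_bytes()).hexdigest()
    return result


def compare_copies(primary, backup):
    """Return (missing, changed): files absent from, or different in, the backup."""
    primary_files = manifest(primary)
    backup_files = manifest(backup)
    missing = sorted(set(primary_files) - set(backup_files))
    changed = sorted(
        name
        for name in primary_files.keys() & backup_files.keys()
        if primary_files[name] != backup_files[name]
    )
    return missing, changed
```

An empty result from `compare_copies(working_copy, backup_copy)` means every content file in the first folder has an identical twin in the second; it says nothing about extra files that exist only in the backup.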

Not all of my content is in this format yet, but I am slowly moving over everything I can find that I've written since I was 15 years old or so into that format, even into 'archive' form.

Reading, Editing, and Running Files

Text (Markdown)

| Action | Software | Notes |
|---|---|---|
| Read | VS Code | Native markdown support |
| Edit | VS Code | Native markdown support |
| Generate preview HTML | VS Code | Native markdown support |
| Export to PDF | VS Code | Support via Markdown PDF |
| Export to HTML | VS Code | Support via Markdown PDF |
| Export to .PNG | VS Code | Support via Markdown PDF |
| Export to .JPEG | VS Code | Support via Markdown PDF |
| Export to HTML | VS Code | Support via Markdown All-In-One |

I have chosen Markdown as my primary file type. There are other files that are peripheral, such as .pdfs and various audiovisual files, but as my own content generation is primarily textual, Markdown is the most important part. Markdown was selected for a few reasons.

Pros

- Can be read by (nearly?) any text editor

- Relatively simple basic list of rules that extend it

- Allows for more freedom than straight text files while being more human readable than html files

Cons

- Basic spec is very minimal

- Inconsistent implementations of extensions to it--at the time of this writing I'm using VS Code (which implements the CommonMark specification) with the Markdown All-in-One extension, but the Markdown Extended extension would add support for a number of additional markdown capabilities.

- CMS specific (or other software specific) extensions to markdown may encourage adding in lots of gibberish that detracts from the actual content.

Images

| Action | Software | Notes |
|---|---|---|
| Read | VS Code | Native support for (at least) JPG, PNG, GIF |
| Edit | Paint.net | Most static image types |

I'm not picky about images. VSCode can load common image formats, and they can all be embedded in the Markdown.

For image editing I could make sure that the installer for Paint.net was stored with the rest of my files.

PDF Files

| Action | Software | Notes |
|---|---|---|
| Read | VS Code | VSCode-PDF |

PDFs can be loaded natively in some browsers, but the extension VSCode-PDF also handles it. This goes along with the various ways I have to generate PDFs from Markdown, as well, giving a 'compiled' way to send files to other people.

Running code and generating diagrams

The choices I've made above are relatively simple, but as we move from simple filetypes to more complicated actions around running code I'm less sure of my choices, and they may change over time.

As it stands today, I don't regularly do much in the way of writing actual software. I'd like this to change, especially so that I can work with products in deeper and more fundamental ways, but I am hardly required to write much code at work (aside from M or Kusto).

If I want to focus on one language to make sure I keep a proper toolchain for, given my workflow desires (run things mostly from VS Code, at least for when I'm writing/playing around) and my professional tendencies (product, data science, cybernetics, etc.) it seems rational to me to work primarily in Python.

This may not always be true, as I have significant interest in a number of things that touch on type theory, so I wouldn't be surprised if in the future I have to find a way to weave in, e.g., Scala or Haskell, but in the meantime I'm going to focus on making sure my Python toolchain is resilient.

Python

| Action | Software | Notes |
|---|---|---|
| Scripting | Python | Reasons described below |
| Managing Python | Conda | All in one package with package management - link |
| Control systems | Python Control Systems Library (via conda) | Python Control Systems Library |

While as I said above I don't do a lot of day to day coding, I do have a lot of interest in algorithms, data science, some machine learning. I also occasionally have things I want to script. As such, I'm using Python as a general scripting language, as well as the language to learn data science, review my old control systems theory, etc. In the case of a situation where I'm pretty much cut off from package managers and other things like that, the packages that came with conda will cover pretty much any common need.

Diagrams

| Action | Software | Notes |
|---|---|---|
| Basic plotting | Matplotlib (via Conda) | [Matplotlib] |
| Interactive viz | Bokeh (via Conda) | [Bokeh] |
| CSV visualizations | SandDance (via VS Code) | [SandDance for VSCode] |

I enjoy data visualization, but I have mostly done it for work purposes and not for my own writing, something I'd like to change. As I delve deeper into control systems theory again, as well as hopefully into data science, I plan on learning the main Python visualization libraries.

SandDance comes out of a Microsoft research project, and won't require me to script up a visualization every time I want to do something--it comes with its own helpful interface that works in the VS Code system that will let me do light data exploration without any other piece of software.

Publishing Content

I have an interest in sharing what I write with the world. However, because the form I initially write things in will likely need to undergo changes for publication (see below), and because I want to maintain resilience despite this, I'd like to make sure that whatever form my site takes I can run it locally as well.

This is not a particularly hard constraint at first blush because you can run pretty much anything on a local server. However, I've written blogs before (such as the one for my ill-fated startup, Prokalkeo), and I've had an annoying time recovering the content later. Moreover, since my content is authored in markdown, I'm interested in making sure my publication method takes markdown as well.

This implies the usage of a Flat File CMS. While I could use a static site generator, for various reasons to do with administration (both local and remote), I find the idea somewhat unappealing. To solve this, I'm using a flat file CMS that's not static--Grav.

Deployment Setup

| Goal | Software | Notes |
|---|---|---|
| Website Framework | Grav | Grav |
| Convert to grav format | Python/Batch files | - |
| Run Website Locally | Grav - Router.php | Grav - Installation |
| Web hosting and deployment | Azure with Github Autodeployment | Grav - Azure |

Once I have my content written, and I know what content I want to export, I can either manually move it to Grav or I can run a script to move it to Grav (for example, in Grav each file I would normally keep in my writing folder would get its own folder). Once it's there, I can engage with it locally by running the command:

php -S localhost:8000 system/router.php

This will start my local server, and I can do anything on it that I could do remotely.

Once I want to deploy, following the instruction that I linked above I just push to the master branch of my Github repository, and it will automatically update my website.
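The "move it to Grav" script mentioned above, where each file in my writing folder gets its own folder, might look something like this sketch. It is not the script I actually run: the function names are hypothetical, and it assumes the page template is called `item`, so each page file is written as `item.md` (Grav picks the template from the Markdown file's name; the right name depends on the theme).

```python
import shutil
from pathlib import Path


def slugify(name):
    """Lower-case a file stem and replace runs of other characters with hyphens."""
    cleaned = "".join(ch.lower() if ch.isalnum() else "-" for ch in name)
    return "-".join(part for part in cleaned.split("-") if part)


def export_to_grav(source_dir, pages_dir, template="item"):
    """Copy each Markdown file into its own page folder under `pages_dir`.

    Example:  writing/My Essay.md  ->  pages/my-essay/item.md
    """
    created = []
    for md_file in sorted(Path(source_dir).glob("*.md")):
        page_folder = Path(pages_dir) / slugify(md_file.stem)
        page_folder.mkdir(parents=True, exist_ok=True)
        destination = page_folder / f"{template}.md"
        shutil.copy2(md_file, destination)  # copy2 keeps modification times
        created.append(destination)
    return created
```

Because the files are copied rather than moved, the writing folder stays the 'final home' and the Grav pages folder is only a derived view of it.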

Tools

Github

Previously, GitLab was used as the repository of choice. However, because I want to simplify the stack and have moved my to-do tracking away from it, I no longer rely on any SaaS-specific tags. This means I can use Github (which also supports unlimited private repos) as well as any other repository host. Due to slightly greater trust in Microsoft (if only from having worked there), I prefer Github over GitLab at this time.

VSCode

VSCode is the primary tool used to edit files in this repository, primarily for the ease of extensibility, git commits, previewing, etc. It also lets us easily run scripts from the same repository we are storing our thoughts, links, etc.

Extensions

There are a number of VSCode Extensions that I have installed for ease of use. I formerly sorted them by importance, but I think that I can instead just group them by 'necessary' and 'unnecessary'.

Necessary extensions are ones that are absolutely needed to make the content in this repository function, or function equivalently to the publishing system. Unnecessary extensions are things that are nice to have for authoring reasons, but hardly a requirement. Finally, there are some extensions that are very specific to my own needs which I'll mention but should probably not be considered directly related to project needs at all.

- Necessary

- Markdown Preview Mermaid Support: Brings Mermaid charts to VSCode for parity with GitLab. SOURCE NOT BACKED UP

- vscode-pdf: Allows us to view pdf files directly in VSCode, in case we don't want to switch windows when working off of them. While slightly less necessary than some of the others, it allows you to not have to keep a Foxit installer around. SOURCE NOT BACKED UP

- Markdown PDF: Allows exporting of Markdown in pdf, html, png, or jpeg. Sample SOURCE NOT BACKED UP

- Markdown All in One: Provides a number of useful features like automatic TOC generation and updating, improved list editing, outputting to HTML, table formatting, Katex, math formatting, etc. SOURCE NOT BACKED UP

- Python: (From description) "Linting, Debugging (multi-threaded, remote), Intellisense, Jupyter Notebooks, code formatting, refactoring, unit tests, snippets, and more." SOURCE NOT BACKED UP

- Nice to have

- GitLens: Allows us to see when changes were made and by whom. General git tool, not necessary. Very useful for tracking how your thoughts on a subject have changed over time though, and requires minimal configuration to do so. SOURCE NOT BACKED UP

- markdownlint: Markdown style checker. Can be annoying if you have two systems configured slightly differently. SOURCE NOT BACKED UP

- WordCounter: General wordcounter/reading time for working on readability of essays, etc. SOURCE NOT BACKED UP

- YAML: YAML language support, relevant for working on my website. SOURCE NOT BACKED UP

- Edit csv: Ability to edit CSV files easily with a decent UX. SOURCE NOT BACKED UP

- SandDance for VSCode : Visual exploration of data SOURCE NOT BACKED UP

- Rainbow CSV : Another entry for data exploration and manipulation, Rainbow CSV allows for very clear color coding of columns of data as well as SQL-esque querying of CSV files. Works well for the 'read' half of reading and writing flat files generated by the Edit csv extension. SOURCE NOT BACKED UP

- Markdown Checkbox: Easy shortcut creation of checkboxes as well as checking them off with a timestamp and user selectable formatting (e.g. strikethrough) as well as a feature to give a list of every checkbox in current document, that I don't use. Not particularly necessary. SOURCE NOT BACKED UP

- Unrelated to project

- Live Share Extension Pack: Live Share extension collection

- Live Share: Real-time collaborative development.

- Live Share Audio: Calls with LiveShare SOURCE NOT BACKED UP

- Live Share Chat: Text chat with LiveShare SOURCE NOT BACKED UP

- Peacock SOURCE NOT BACKED UP

- Polacode: Do you particularly care about having pretty screenshots of your code? If so, this is the extension for it. SOURCE NOT BACKED UP

- Testing

- Nested Tags: Taxonomy systems are a commitment. Nested Tags is interesting because it allows multiple layers of nested tags, which essentially allows you a folder alternative. If I'm interested in building my own syntopicon, for instance, I can simply have in my frontmatter "syntopicon/mathematics" or "syntopicon/law" and those tags will apply. Unfortunately it seems like it's inconsistent.

- Deprecated

- [Markdown+Math]: Brings Katex math rendering to VSCode for parity with GitLab. Currently I have this turned off due to the fact that it is centering my non-math tables in an annoying fashion.

- Markdown Preview Enhanced: Deprecated in favor of Markdown All-In-One

Python

While I'm running Python via VSCode with the extensions discussed above, I could in theory run it without. There are lots of ways to run Python, and I'm an expert in precisely zero of them. What I can do with my setup is either run *.py files without leaving my editor, or highlight code in line with markdown and run it.

While I'd like to come back to this section and give more advice on setting up Python at some point, what I did was install Anaconda and then follow this document.

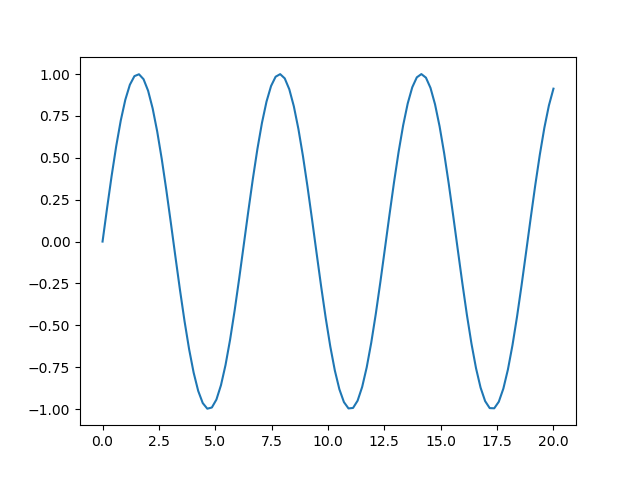

If I highlight the following code, bring up the command palette for VS Code, and select "Python: Run Selection/Line in Python Terminal", it yields the image that follows.

import matplotlib.pyplot as plt
import numpy as np

# Plot sin(x) over 100 evenly spaced points in [0, 20]
x = np.linspace(0, 20, 100)
plt.plot(x, np.sin(x))
plt.show()

Likewise, if I do the same to the code below except with "Python: Run Selection/Line in Python Interactive Window" it will launch a Python notebook and run the Bokeh image in an interactive window (due to my settings, in a split window to the right of this editing pane).

import numpy as np
from bokeh.io import output_notebook, reset_output, show
from bokeh.plotting import figure

# Send output to the notebook / interactive window rather than an HTML file
reset_output()
output_notebook()

x = np.linspace(0, 4*np.pi, 100)
y = np.sin(x)

TOOLS = "pan,wheel_zoom,box_zoom,reset,save,box_select"

p1 = figure(title="Legend Example", tools=TOOLS)

# legend_label replaces the legend keyword, which Bokeh deprecated in 1.4
p1.circle(x, y, legend_label="sin(x)")
p1.circle(x, 2*y, legend_label="2*sin(x)", color="orange")
p1.circle(x, 3*y, legend_label="3*sin(x)", color="green")

show(p1)

Other Tools

As I prepare to buy, decorate, and maintain a home, it's been highly suggested to me that I keep a journal for interior design, etc. The number one tool for this is, of course, Pinterest.

However, as of January 2020, Pinterest is still losing money on an annual basis. In a potential recession, I'm reasonably concerned about a significant amount of effort going into tracking fashion, aesthetic, etc., that will disappear in a puff of IaaS.

Towards this, I have been looking for tools that will allow me to perform many of the curation activities that Pinterest allows for, without the risk of my effort going to zero. Given the amount of time people put into their aesthetic, I don't wish for my effort to disappear. I also don't particularly wish to have something that gives me low to moderate value for a high amount of maintenance.

Pinback

One option I have identified is pinback. To quote the repository:

Pinback is a free, simple bookmarklet that allows you to backup and export your Pinterest data. It runs privately in your web browser and exports your pinned links to a Netscape Bookmark-flavored HTML file. This is the de facto standard among web browsers and services like Delicious, making your data safe and easily portable. “Netscape” also has that wonderful artisanal-aged, vintage-inspired sound to it, don’t you think?

Unfortunately, this only saves the links and not the images, but it's better than going to zero.
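To pull the saved links back out of such an export and into my own files, a small parser is enough. The sketch below uses only Python's standard library and assumes the export follows the usual Netscape bookmark layout of `<DT><A HREF="...">Title</A>` entries; I have not checked Pinback's exact output against it, and the class and function names are hypothetical.

```python
from html.parser import HTMLParser


class BookmarkParser(HTMLParser):
    """Collect (url, title) pairs from a Netscape-style bookmark file."""

    def __init__(self):
        super().__init__()
        self.bookmarks = []
        self._current_href = None
        self._title_parts = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser lower-cases tag and attribute names, so <A HREF=...> is "a"/"href".
        if tag == "a":
            self._current_href = dict(attrs).get("href")
            self._title_parts = []

    def handle_data(self, data):
        # Only collect text while inside a link that has an href.
        if self._current_href is not None:
            self._title_parts.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._current_href is not None:
            title = "".join(self._title_parts).strip()
            self.bookmarks.append((self._current_href, title))
            self._current_href = None


def parse_bookmarks(html_text):
    """Return a list of (url, title) tuples found in the bookmark HTML."""
    parser = BookmarkParser()
    parser.feed(html_text)
    parser.close()
    return parser.bookmarks
```

From there the pairs could be written out as a Markdown list of links, which would put the Pinterest data in the same format as everything else in the repository.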

Pinry

I'm aware of Pinry, but haven't had a chance to set it up. It's a self-hosted, less functional Pinterest clone. That means that, while I certainly have an additional workload to keep it up, I don't have to worry about any kind of work I put into design journals disappearing.